Program: The Enough Abuse Campaign.

Organization: The Massachusetts Child Sexual Abuse Prevention Partnership.

Type of Evaluation: Statewide program inventory; pre- and post-campaign Random-Digit-Dial (RDD) telephone polls, four years apart; post-training evaluation surveys completed by trainees; participatory community-based evaluation using the Community Toolbox method and its Online Documentation and Support System.

Sample Sizes: Approximately 800 citizens polled by each RDD poll; post-training evaluations completed by more than 5,000 trainers and trainees; data input into the documentation system by staff members of the 26 partnership organizations.

Evaluation Period: Five years, 2002-2007.

Cost: $1.2 million.

Funded By: The U.S. Centers for Disease Control and Prevention, with in-kind contributions from partnership members.

Public health campaigns aimed at preventing everything from drug abuse to childhood obesity all have something in common: They are notoriously difficult to evaluate.

Researchers say it’s often impossible to make a scientific determination about the cause-and-effect connection between campaign messages – such as “Parents: The Anti-Drug” – and changes in specific outcomes, such as the number of youth who smoked pot last month. (See “Uh-Oh: What to do When a Study Shows Nothing?” October 2006.)

In 2002, the U.S. Centers for Disease Control and Prevention (CDC) sought for the first time to address the issue of child sexual abuse using a public health strategy. It awarded three grants to groups in Georgia, Minnesota and Massachusetts, which set out to address abuse by building adult and community responsibility for prevention.

One of those groups was the Massachusetts Child Sexual Abuse Prevention Partnership, a collaboration of 26 public and private agencies, advocacy groups and practitioners spearheaded by the nonprofit Massachusetts Citizens for Children.

“At the time, CDC felt they had no evidence that [educating children about abuse] was really working. We had come to the same conclusion,” said Executive Director Jetta Bernier. “They said, ‘We need a major paradigm shift.’ ”

With that in mind, when the partnership launched its Enough Abuse Campaign in 2003, it turned to the Community Toolbox, a community-based participatory evaluation method that places a high value on documenting, sharing and learning from both the intermediate and final outcomes of social interventions.

The toolbox, a product of the University of Kansas’ Working Group for Community Health and Development, is available for free at http://ctb.ku.edu/en.

“We never had that sense that in five years, we were going to somehow prevent child sexual abuse, at least not in a measurable way,” Bernier said.

“That’s the beauty of the Community Toolbox experience. It helped with the conceptual framework of how to create social change when you’re dealing with a public health problem. It showed us that we’re on the right road.”

Is “the right road” the best evaluation outcome possible after five years and $1.2 million?

What to Measure

When you’re talking about public health, looking for numbers that go up or down isn’t always the best – or only – measure of a program’s effectiveness, researchers say.

“From my experience studying prevention programs, setting the bar for actual change in incidents is setting it very high for these programs … especially for something like child sexual abuse,” said Beth Molnar, an assistant professor at Harvard’s School of Public Health, who has been awarded a five-year federal grant to evaluate effective child abuse prevention programs.

Instead, the Massachusetts project took the approach of raising awareness about child sexual abuse. In 2005, Rodney Hammond, director of the CDC’s Division of Violence Prevention, credited the partnership’s Enough Abuse Campaign with making Massachusetts “one of the first states in the nation to lead a trail blazing effort … by building a movement of concerned citizens, community by community.”

Can that be quantified?

Yes, Bernier said, by using the Community Toolbox’s Online Documentation and Support System (ODSS), an Internet-based recording, measurement and reporting system.

For a fee, University of Kansas researchers trained the partnership members to record into ODSS any social changes initiated by their programs, with changes defined in public health terms as any new or modified programs, policies or practices related to the campaign’s goal.

By analyzing those entries over time, partnership members and evaluators were able to break down social changes by various factors – such as the sector in which the changes occurred – and identify which factors were associated with increases and decreases in the overall rate of change.

“As we were developing activities, we were taught how to describe them, how to code them,” Bernier said.

All campaign-related events were eventually coded into the system, including such things as the development of the campaign’s logic model, the selection of three pilot communities, community training dates and outreach to specific organizations.

When the partnership “finally pressed the button to see what the data looked like,” 139 distinctly new or modified programs, policies or practices had been entered into the ODSS, Bernier said.

Presented in graph form, the line of changes that had occurred rockets from the bottom left corner of the graph (the beginning of the campaign) to the top right corner.

“It’s no one program or policy or practice or any change that’s really going to get at the prevention of child sexual abuse,” said Dan Schober, a graduate research assistant at the University of Kansas, and one of the ODSS evaluators. “It’s really the accumulation of a lot of things.”

Referring to the graph, he said, “This line represents that.”
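The kind of tally behind such a graph can be sketched in a few lines of Python. This is only an illustration of the idea, not the ODSS itself: the records, dates and sector names below are hypothetical, and the function names are invented for this example.

```python
from collections import Counter
from datetime import date
from itertools import accumulate

# Hypothetical log entries: (date recorded, sector). These are NOT actual
# ODSS data; they only stand in for coded community changes.
records = [
    (date(2003, 5, 1), "schools"),
    (date(2003, 11, 15), "media"),
    (date(2004, 3, 10), "schools"),
    (date(2004, 9, 2), "government"),
    (date(2005, 1, 20), "schools"),
    (date(2005, 6, 30), "media"),
]


def cumulative_by_year(entries):
    """Return {year: running total of changes logged through that year}."""
    per_year = Counter(logged.year for logged, _ in entries)
    years = sorted(per_year)
    running_totals = accumulate(per_year[year] for year in years)
    return dict(zip(years, running_totals))


def count_by_sector(entries):
    """Return how many logged changes fall in each sector."""
    return Counter(sector for _, sector in entries)


cumulative = cumulative_by_year(records)
by_sector = count_by_sector(records)

for year, total in cumulative.items():
    print(year, total)
```

Plotting the running totals against time gives the rising line Schober describes; a steep stretch or a flat one marks a period worth a closer look.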

Over the course of the campaign, evaluators and partnership members met regularly to review qualitative information surrounding “critical” events associated with steep increases or stalls in overall community changes. For example, after a second statewide conference, reviewers were able to see that the subsequent outreach to new communities significantly increased the number of activities coded into the ODSS.

Realistic Outcomes

The partnership gathered more traditional hard data, as well. As required by its CDC funding, the partnership conducted an initial inventory of the number of primary prevention programs run by the state’s public agencies. It also commissioned telephone polls in 2002 and 2007 that set a baseline and then measured changes in public perception of the prevalence of child sexual abuse and how to prevent it.

Viewed together in the campaign’s literature, the ODSS, random-digit-dial polls and post-training evaluation data paint a solid picture of success that includes:

• Two state conferences and several community forums.

• A website, www.enoughabuse.org, and a recognizable campaign logo and media brand.

• The repeal of the Massachusetts statute of limitations in criminal child sexual abuse cases.

• An increase, from 70 percent to 93 percent, in the share of polled citizens who said that adults and communities (rather than children) should take prime responsibility for preventing child sexual abuse. The share of citizens who indicated they would be willing to participate in local training rose from 48 percent to 64 percent.

• The establishment of three permanent local abuse prevention coalitions in the communities of North Quabbin, Newton and Gloucester, Mass. Other communities have contacted the partnership about joining.

• Partnership-wide commitment to identify and shift resources to best practices across all program areas, from engaging key officials to selecting criteria for local trainers.

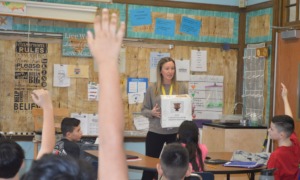

• The education of more than 75 local trainers, who have now trained more than 5,000 parents, professionals and other citizens. More than nine in 10 participants said the training taught them how to identify problem or abusive behaviors in adults, how to assess unhealthy sexual behaviors in children and what to do if they suspect someone is sexually abusing a child.
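The poll shifts listed above (70 to 93 percent, and 48 to 64 percent) can be put in rough context with a little arithmetic. The sketch below is not the evaluators' analysis. It assumes each poll was a simple random sample of exactly 800 respondents, which simplifies the "approximately 800" reported and ignores any weighting a random-digit-dial poll may use, and it applies a standard two-proportion z-test.

```python
import math

# Assumption: exactly 800 respondents per poll (the article says "approximately").
SAMPLE_SIZE = 800


def point_change(before_pct, after_pct):
    """Change between two polls, in percentage points."""
    return after_pct - before_pct


def two_proportion_z(p_before, n_before, p_after, n_after):
    """z statistic for the difference between two independent proportions."""
    pooled = (p_before * n_before + p_after * n_after) / (n_before + n_after)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    return (p_after - p_before) / std_err


# Adults and communities should take prime responsibility: 70% -> 93%.
responsibility_change = point_change(70, 93)
responsibility_z = two_proportion_z(0.70, SAMPLE_SIZE, 0.93, SAMPLE_SIZE)

# Willing to participate in local training: 48% -> 64%.
training_change = point_change(48, 64)
training_z = two_proportion_z(0.48, SAMPLE_SIZE, 0.64, SAMPLE_SIZE)

print(responsibility_change, round(responsibility_z, 2))
print(training_change, round(training_z, 2))
```

Under those assumptions, both z statistics come out well above 1.96, the usual threshold for a difference unlikely to be due to sampling error alone. That says the opinions moved; it does not say the campaign caused the movement.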

“I think they’ve done a wonderful job, especially with the community-level nature of the work they did,” said Molnar, the Harvard researcher.

“To me, that’s a more realistic outcome to study than to depend solely on whether new cases of child abuse and neglect go down.”