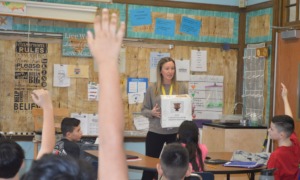

Rogers: “This is very much directing our programming.” Photo: Sherwood Forest Camp

Evaluations don’t have to be rigid and stodgy in order to yield valuable and informative data for youth-serving programs. Much can be learned from evaluations that are flexible, easy, and even fun.

Who better to prove that than the American Camp Association (ACA), the membership and accreditation organization for hundreds of the nation’s residential and day camps?

In 2005, in response to the ever-increasing push for evidence-based practices, ACA released what it billed as the first “scientific” evidence that going to camp produces positive developmental outcomes for youth. (See “The Benefits of Camp,” May 2005.)

Those findings were based on more than 5,000 pre-camp and post-camp surveys (the “Camper Growth Index,” or CGI) of campers ages 8 to 14. The surveys measured the effects of going to camp on 10 outcomes, including self-esteem, independence, friendship skills, adventure and values.

More than 80 ACA-accredited camps took part, and the study whetted the appetites of camp directors nationwide for more.

“That’s how the second set of modular outcome surveys got started,” said Deborah Bialeschki, ACA’s senior researcher. “They wanted something that was very simple and easy to use that [camp] staff could help do without a lot of investment in training.”

While the new tool is not a longitudinal, randomized controlled study, some camps have found it useful in evaluating and shaping their work.

Modular Nuts and Bolts

With cobbled-together funding, ACA secured the services of two University of Utah professors who were willing to do “a lot of work for not much money,” Bialeschki said.

Although the CGI had incorporated 10 outcomes, ACA had enough money to develop questionnaires for only seven of them. It chose friendship skills, independence, teamwork, family citizenship, perceived competence, interest in exploration and responsibility.

“We picked … the things around youth engagement, which programs often struggle with,” Bialeschki said.

They ended up with three highly flexible survey modules:

• Camper Learning Survey: Designed for 6- to 9-year-olds, this survey asks two questions (called “indicators”) for each of the seven outcomes. Younger campers circle their answers on a four-point Likert scale ranging from “I didn’t learn anything about this” to “I learned a lot about this.” One of the indicators is: “At camp, did you learn how to be better at making friends?”

“The little kids like it. They think it’s really cool that they’re like the big kids, with forms to fill out,” Bialeschki said.

Staffers require only brief training on how to assist young respondents.

“We scripted out things for staff members to say,” Bialeschki said. “Some of these staff are very young themselves.”

• Basic Camp Outcomes Survey: Designed for 10- to 17-year-olds, this survey consists of one to seven outcome questionnaires mixed and matched to measure each camp’s intentional efforts. Each questionnaire consists of six to 14 indicators, some with more than one part. Campers circle answers on a five-point Likert scale ranging from “decreased” to “increased a lot, I am sure.”

One sample indicator: “How much, if any, has your experience as a camper in this camp changed you in each of the following ways? 1. Placing group goals above the things that I want. 2. Working well with others.”

• Detailed Camp Outcomes Survey: Designed for 10- to 17-year-olds, but working best with those over age 13, this survey also consists of up to seven outcome questionnaires. In addition, a technique Bialeschki calls a “retrospective pretest” measures not only where campers stand on each outcome, but also how much they have changed since before camp.

Each questionnaire incorporates six to 14 two-part indicators. Youth respond by first circling a “status” answer on a six-point Likert scale, and then circling a “change” answer on a second six-point scale.

For example, for the first part of the indicator “I own up to my mistakes,” youth circle a “status” answer ranging from “false” to “true.” Then, in response to the prompt, “Is the above statement more or less true today than before camp?” they circle a “change” answer ranging from “a lot less” to “a lot more.”

“It’s relatively new in terms of methodology, but it’s working really well,” Bialeschki said. “It allows a [camp] director to get information as if they’re doing a pre-/post-test design without all of the work of that.”

Encouraging Use

While camp directors repeatedly told Bialeschki during conferences and camp visits that they were hungry for flexible and easy-to-administer tools, ACA researchers knew that solid reliability and unfettered access would determine whether camps used the tools. And ACA wanted to strongly encourage their use.

Around 2005, ACA accreditation standards began requiring camps to document goals for participants, set specific outcomes, and provide training for staff around those outcomes. The standards also began requiring what they call “written evidence of multiple sources of feedback on the accomplishment of established outcomes.”

ACA decided that, in order to model good evaluations and encourage camps to follow suit, it would make all of its evaluation tools available to the public at no charge.

In addition, it wanted people who used the tools to be able to trust the results, Bialeschki said. So ACA readily publicizes the reliability statistics for its questionnaires, as well as the methodology behind its validity testing.

It also piloted the questionnaires at several camps and weeded out questions that tested weakly.

Putting It Through Its Paces

One of those pilot sites was Sherwood Forest Camp in St. Louis, headed by Mary Rogers. The 71-year-old camp serves a diverse population of mostly low-income children from Missouri and Illinois.

Rogers asked staff members to pick two of the seven outcomes as a focus for each of the camp’s three villages, which are divided by age groups. At the end of each session, campers completed the two corresponding questionnaires.

“We got very good feedback,” Rogers said. On each indicator, at least “77 percent of the kids had noticed a change in themselves as a result of being at camp.”

The results are shaping the camp’s work with youth. For example, this year Rogers asked staffers to go a step further and pick just two indicators from last year’s outcome questionnaires on which to focus. For the youngest campers, the staff will focus on friendship and exploration indicators. To influence one of the friendship indicators, staff members have decided to have campers play listening games, talk to a new camper each day, mix up their seating at mealtimes and look for friends in other cabins who share their interests.

“This is very much directing our programming,” Rogers said of the outcome surveys.

ACA plans to develop more outcome questionnaires as funding permits, Bialeschki said. She emphasized that the flexibility and reliability of the evaluation tools extend to other types of programs as well.

“It could be your after-school program or your church youth group,” she said. “You can take the word ‘camp’ out and put anything in there. But that’s the only word you can mess with.”

For more information, go to www.acacamps.org/research.